Chapter 1 Linear regression

1.1 Introduction

1.1.1 Machine Learning

I am not talking about software engineers. I am talking about those in “physical” engineering fields such as chemical, mechanical, electrical, and so on.

- Real-world problems are modeled as standard machine learning tasks: regression, classification, etc.

- Algorithms that perform a task they have not been specifically programmed for, also called:

- Data Mining

- Big Data

- Neural Networks

- Soft Computing

- Bioinspired algorithms

Machine Learning user view

Implementing a Machine Learning technique in a real-world engineering setting usually (at least) implies:

- Realizing the application has uncertainty and cannot be solved by deterministic methods. (Engineering)

- Determining if there are enough data to use a data-driven algorithm. (Engineering -> Statistics)

- Modelling the application as a standard problem. (Engineering -> Mathematics)

- Choosing a particular solution algorithm. (Mathematics -> Computing)

- Adjusting the algorithm by setting some hyperparameters. (Mathematics -> Heuristics)

- Assessing and testing results. (Computing -> Engineering)

Real-world problems in Machine Learning

Applying Machine Learning techniques is useful for tasks that contain uncertainty:

- Traffic predictions

- Self-driving cars

- Failure prediction / fault diagnosis in Mechanical Engineering

- Load forecasting / fault detection in Electrical Engineering

- Applications of Machine Learning

- 6 Top Applications of Machine Learning

- CAPTCHAs by human o machine intelligence?

- Top 10 Applications of Machine Learning

Standard tasks in Machine Learning

- Supervised learning

- Regression

- Classification

- Unsupervised learning

- Clustering

- Dimensionality reduction

- Temporal series prediction

- Generative AI

1.1.2 The R language and the RStudio environment

- These notes are made with R Markdown, which must be used for the exercises of the assignments

- The R language has support for:

- Vectors

- Matrices

- Data frames (a subtype of list)

- Graphics

- User-defined functions

- Control flow structures (if, for, while, …)

- Vectors

- There are many packages that extend R capabilities fordput specialized tasks

1.1.3 Data Sources

- R has a lot of built-in example datasets:

- You can also download datasets from public repositories:

- Some examples of engineering regression problems:

- We consider mostly rectangular data (matrices)

1.1.4 Math recall: Probabilities

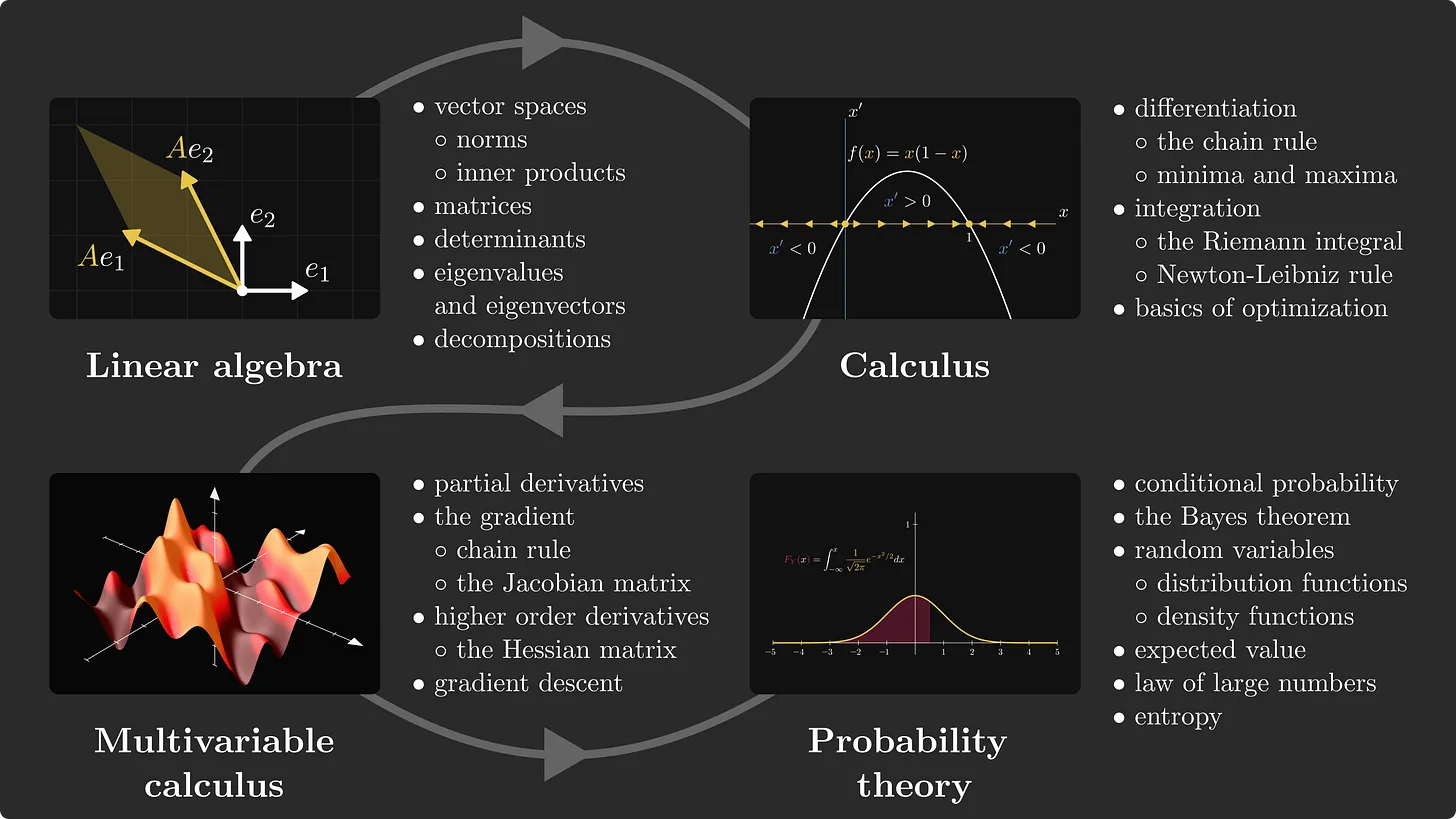

It is important that you review all the necessary Mathematics, see e.g. this web

- A probability is a function of events \(A_1, A_2,\dots\) in a set

- Each event \(A_i\) has a probability \(p_i\): \(P(A_i) = p_i\)

- Probabilities are between 0 and 1: \(0\le p_i\le 1\)

- All probabilities add up to 1: \(\sum_i p_i = 1\)

- A key concept is independence: \(P(A \text{ y } B) = P(A) P(B)\)

- Conditional probability: \(P(A|B)=\frac{P(A \text{ y } B)}{P(B)}\)

- Independence: \(P(A|B)=P(A)\)

- Correlation measures independence (but only linear!!):

1.1.5 Exploratory Data Analysis and Descriptive Statistics

Before any learning task, we must have an overall idea of our data: this is descriptive statistics

?mtcars

# Visualize

plot(mtcars$wt, mtcars$mpg)

hist(mtcars$cyl)

# Transform

mtcars$l100km = 235.215 / mtcars$mpg

with(mtcars, plot(wt, l100km)) # plot(mtcars$wt, mtcars$l100km)

mtcars[mtcars$cyl=='4', ]

mtcars[, 1:3]

# Exploratory Data Analysis

summary(mtcars)

mean(mtcars$l100km)

with(mtcars, cor(wt, l100km))

with(mtcars, cor(am, l100km)) # With qualitative variables????

any(is.na(mtcars))

# Basic methods are linear

print(mean(mtcars$mpg))

print(235.215 / mean(mtcars$mpg))

print(mean(mtcars$l100km))

with(mtcars, cor(mpg, l100km))1.1.6 Stochastic simulation

- We can also generate our own data

- This can simulate some physical phenomenon, e.g. noise

# number of samples

n = 20

# Voltage (volts)

voltage = seq(1, length.out=n)

# Resistance (ohms)

resistance = 50

# "Measure" current

current = voltage / resistance

# Add noise

noise = runif(n, min=0.9, 1.1)

current = current * noise

# Build a data frame

measures = data.frame(voltage, current)

with(measures, plot(voltage, current))1.1.7 Estimation

The simplest process in inference is the estimation of a parameter of a probability distribution, by the following process:

- Obtain a sample of the distribution \(X_1\dots X_n\)

- Assume a parametric distribution \(X\sim D(\theta)\)

- Compute an estimate of the parameter(s) \(\hat\theta\)

- Assess the estimation error, e.g. \(\hat\theta\to\theta\) as \(n\to\infty\) would be a good property

The estimate is also a random variable, so we can estimate its mean, variance, distribution, etc.

# Estimate the parameter p of a Bernoulli distribution B(p)

# Observation: if X is B(p), p = mean(X)

#

n_experiments = 100

est_media = rep(0, n_experiments)

n_samples = 10

for (i in 1:n_experiments) {

# Phase 1: create sample

muestra = rbinom(n_samples, size = 1, p = 0.5)

# Phase 2: model + estimation

est_media[i] = sum(muestra) / length(muestra)

}

cat("First estimate: ", est_media[1])

cat("Average estimate: ", mean(est_media))

cat("Variance of estimates: ", var(est_media))1.1.8 Exercises

- Download some of the examples from Kaggle or UCI

- Describe each of the variables

- Check the existence of categorical (qualitative) variables (factors)

- Check the existence of missing values (NA)

- Plot some of the numeric values

- Plot the histogram of some of the categorical variables

- Consider the uniform continuous distribution on the interval (0,p), where p is a parameter: U(0, p)

- Produce a sample distributed as U(0, p): \(X_1\dots X_n\)

- From the sample, estimate p using the estimator \(\hat{p}=\max_i(X_i)\)

- Compute the empirical mean and variance of \(\hat{p}\)

- Is \(\hat{p}\) an unbiased estimator?

1.2 Linear regression

- A linear regression is a statistical model

- The model is a relation between two variables, \(x\), \(y\): \(y=F(x)\)

- Variables are not symmetric:

- One variable (\(x\)) is visible, explanatory.

- One variable (\(y\)) is hidden, the response.

- The aim is to predict \(y\) from \(x\)

- Both \(x\) and \(y\) are assumed to come from a probability distribution

1.2.1 Regression models

- Define a family of models

- Generate a fitted model

data("cars")

modelo = lm(dist ~ speed, data=cars)

summary(modelo)

# What is R-squared?

SSyy = with(cars, sum((dist-mean(dist))**2))

SSE = sum(modelo$residuals**2)

R_2 = 1-SSE/SSyy

print(R_2)- The R command lm makes a linear model:

\[\text{dist ~ speed} \Rightarrow \qquad \text{dist}= \theta_0+\theta_1\, \text{speed}\]

- Parameters: \(\theta_0,\theta_1\)

- Fitting is computing the optimal value of parameters

- \(\theta_0\) is the intercept

- \(\theta_1\) is the coefficient of the variable speed

- Analyze the residuals

- Prediction \(\hat{y_i}=\theta_0+\theta_1\, x_i\)

- Error or residual: \(E_i=y_i-\hat{y_i}\)

1.2.2 Math recall: Regression and probabilities

This field is often called Statistical Learning

- The key of dependence is that provides information

- Regression is based in \(x\), \(y\) being dependent

- Data \(x\) is a sample from a probability distribution \(X\) (unknown)

- Regression estimates the mean of \(P(Y|X=x)\)

- Correlation does not imply causation!

- The algorithm is not to blame if data is biased.

1.2.3 Multilinear regression

The linear regression model is easily extended to several explanatory variables:

The R command lm makes a multilinear model: \(mpg = \theta \cdot (\text{disp, drat, wp})\)

Parameter vector: \(\theta\)

Fitting is computing the optimal value of parameters

- \(\theta_0\) is the intercept

- \(\theta_1\dots \theta_d\) are the coefficients of the variables

Analyze the residuals for the input vector \(x\):

- Prediction \(\hat{y_i}=\theta \cdot x_i\)

- Error or residual: \(E_i=y_i-\hat{y_i}\)

data("mtcars")

modelo = lm(mpg ~ disp + drat + wt, data=mtcars)

summary(modelo)

plot(modelo$residuals)- The signs of coefficients indicate how a variable affects the response

- The values of coefficients measure the relative importance of variables

data("mtcars")

modelo_1v = lm(mpg ~ disp, data=mtcars)

modelo_3v = lm(mpg ~ disp + drat + wt, data=mtcars)

modelo_allv = lm(mpg ~ ., data=mtcars)

print(sqrt(sum(modelo_1v$residuals^2) / nrow(mtcars)))

print(sqrt(sum(modelo_3v$residuals^2) / nrow(mtcars)))

print(sqrt(sum(modelo_allv$residuals^2) / nrow(mtcars)))We must compare if including more variables improves (decreases) the residual error

It is important to take into account the ranges of variables. If they are very different, analyzing the magnitude of coefficients is meaningless. This can be fixed by normalization or scaling:

\[X_\text{norm} = \frac{X- \bar{X}}{var(X)} \qquad X_\text{scale}=\dfrac{X-\min(X)}{\max(X)-\min(X)}\]

data("cars")

# Normalize data

cars_mat = scale(cars)

cars_normalized = as.data.frame(cars_mat)

centers = attr(cars_mat, "scaled:center")

scales = attr(cars_mat, "scaled:scale")

# Regression

modelo = lm(dist~speed, data = cars_normalized)

# Prediction

dist_hat = predict ( modelo )

dist_hat_real = dist_hat * scales[2] + centers[2]

# Plot residuals

with(cars, plot(speed, dist))

lines(cars$speed, dist_hat_real, col="red")1.2.4 Math recall: Regression and optimization

Mathematically, the solution of regression is an optimization problem:

Model: \(y=\theta_0+\theta_1\, x\)

Parameters or weights: \(\theta_0,\, \theta_1\)

Error function, cost function or residual: \[ E_{\{x,y\}}(\theta_0,\theta_1) = \sum_i \left(y_i-\theta_0-\theta_1\, x_i \right)^2 \]

Solution: \[\min_{\theta_0,\theta_1} \sum_i \left(y_i-\theta_0-\theta_1\, x_i \right)^2\]

1.2.5 Qualitative predictors

- We can use discrete, numeric variables as explanatory variables (but not as target, that would be classification instead of regression)

- Categorical variables with only two classes have a natural encoding 0-1. Choosing which is the success (1) class has no effect on regression

- Coding categorical variables with more than two classes requires some thinking

- A naive coding with integers (e.g. yellow=1, blue=2, etc.) introduces spurious quantitative relations (magnitude, order) that have no physical meaning

- You can introduce several dummy variables, one for each class with only two categories: either the instance belongs or no to the class. This is called one-hot encoding

Categorical variables are encoded automatically by lm (but be aware of what is happening!!)

Example: mtcars

- Discrete, numeric variables: cyl, gear

- Qualitative, categorical variables: vs, am

Example: iris

- Categorical variable: Species

- Warning: this is just an example. The logical application with the iris database is classification using Species as target

1.2.6 Exercises

- Download some of the examples from Kaggle or UCI

- Guess, according to the problem logic, which is the response variable \(y\)

- Select one of the continuous variables \(x\)

- Compute the linear regression y ~ x

- Compute the error (sum of squared residuals)

- Plot the data and the linear regression

- Plot the residuals

- Select one or more additional variables and compute multilinear regression

- Compute the error (sum of squared residuals)

- Discuss the relative influence of variables

1.3 Goodness of fit

1.3.1 Training error

- The fitted model gives a prediction \(\hat{y_i}=F_\theta(x_i)\)

- We measure the goodness of fit by the training error: \(\sum_i (y_i-\hat{y_i})^2\)

- Other names:

- Sum of Squared Errors (SSE)

- Sum of Squared Residuals

- We can use other measures, but we have to be consistent (always use the same), e.g.:

- Mean Squared Error (MSE) = \(\frac{SSE}{n}\)

- Root Mean Square (RMS) of residuals = \(\sqrt{MSE}\)

We can then decrease the training error by using a model with higher capacity.

1.3.2 Polynomial regression

- We can make a nonlinear model:

\[ y= \theta_0+\theta_1 x + \theta_2 x^2 + \dots + \theta_p x^p \]

- This model has higher capacity, number of parameters, etc.

- It is expected that this model produces a smaller (training) error

- But the model is still linear in the parameters

- The parameters can be computed in R with lm

data("cars")

modelo_lineal = lm(dist ~ speed, data=cars)

modelo_cuad = lm(dist ~ speed + I(speed^2), data=cars)

with(cars, plot(speed, dist))

lines(cars$speed, predict(modelo_lineal), col="red")

lines(cars$speed, predict(modelo_cuad), col="green")

cat("Error lineal = ", sqrt(sum(modelo_lineal$residuals^2) / nrow(cars)))

cat("Error cuadrático = ", sqrt(sum(modelo_cuad$residuals^2) / nrow(cars)))- Additional regressors can also be included in multilinear regression

data("mtcars")

modelo_1v = lm(mpg ~ disp, data=mtcars)

modelo_3v = lm(mpg ~ disp + drat + wt, data=mtcars)

modelo_3v_poly = lm(mpg ~ disp + drat + wt + I(disp^2) + I(drat^2) + I(wt^2), data=mtcars)

print(sqrt(sum(modelo_1v$residuals^2) / nrow(mtcars)))

print(sqrt(sum(modelo_3v$residuals^2) / nrow(mtcars)))

print(sqrt(sum(modelo_3v_poly$residuals^2) / nrow(mtcars)))

summary(modelo_3v_poly)- This is equivalent to create new columns: \(x_2=x^2, x_3=x^3\), etc.

data("cars")

X = cars$speed

for (i in 2:5) {

X = cbind(X, cars$speed^i)

}

modelo = lm(dist ~ X, data=cars)

print(sqrt(sum(modelo$residuals^2) / nrow(cars)))

plot(cars$speed, cars$dist)

lines(cars$speed, predict(modelo), col="red")- You can also add terms that are different from polynomials

data("mtcars")

# Nueva columna

mtcars$l100km = 235.215 / mtcars$mpg

modelo_1v = lm(mpg ~ disp, data=mtcars)

modelo_1v_l100km = lm(l100km ~ disp, data=mtcars)

print(sqrt(sum(modelo_1v$residuals^2) / nrow(mtcars)))

print(sqrt(sum(modelo_1v_l100km$residuals^2) / nrow(mtcars))

with(mtcars, plot(disp, mpg))

lines(mtcars$disp, predict(modelo_1v))

with(mtcars, plot(disp, l100km))

lines(mtcars$disp, predict(modelo_1v_l100km))- If columns are not linearly independent there are numerical instabilities

data("cars")

cars$speedkmh = 1.60934 * cars$speed

# . = Resto de columnas!!

modelo = lm(dist ~ ., data=cars)

with(cars, plot(speed, dist))

summary(modelo)- In principle, columns can be added up to arbitrary degrees

# Try different degrees 10, 20, etc.

max_degree = 10

#

data("cars")

X = cars$speed

for (i in 2:max_degree) {

X = cbind(X, cars$speed^i)

}

modelo = lm(dist ~ X, data=cars)

print(sqrt(sum(modelo$residuals^2) / nrow(cars)))

plot(cars$speed, cars$dist)

lines(cars$speed, predict(modelo), col="red")

summary(modelo)1.3.3 Math recall: Regression and Linear Algebra

It is easier to use matrix notation, in particular when we have multilinear models. Based on a data matrix \(\tilde{x}=(1,x)\):

Model: \(y= \tilde{x} \, \theta\)

Parameters or weights: vector \(\theta\)

Error function, cost function or residual: \[ E_{\{\tilde{x},y\}}(\theta) = \sum_i \left(y_i-\theta\, \tilde{x}_i \right)^2 = \|Y-X\, \theta\|\]

Solution: \[\min_{\theta} \sum_i \left(y_i-\theta \, \tilde{x}_i \right)^2 = \min_{\theta} \|Y-X \,\theta\|^2 \]

The solution for the linear model \(Y=X\,\theta\) is given by the normal equations: \[\theta^*=(X^\top\,X)^{-1}\,X^\top\,Y\] where \(X^+=(X^\top\,X)^{-1}\,X^\top\) is the Moore-Penrose pseudo-inverse.

If the matrix \(X\) has \(m\) columns, the size of \((X^\top\,X)^{-1}\) is \(m\times m\)

The effect of adding columns is increasing \(m\)

If we add columns up to \(m=n\), then the matrix \(X\):

- is square

- is invertible if its columns are linearly independent (full rank)

If \(X\) is invertible, the system \(Y=X\theta\) has exact solution and the error is zero

If we add columns up to \(m>n\), then

- \(rank(X)\le n < m\)

- \(rank((X^\top\,X)^{-1})=rank(X)< m\)

- \((X^\top\,X)^{-1}\) is singular (not invertible)

Example: the matrix \(X\) of the cars database is \(n\times m\)

- Columns \(m=2\): speed and 1 (\(\theta_0\cdot 1=\theta_0\) is the intercept)

- Rows \(n=50\)

- So its rank is…

- But there are repeated (linearly dependent) rows: \(rank(X)<n\)

- In practice, we will see numerical instabilities much before reaching \(m=n\)

1.3.4 Exercises

- Download some of the examples from Kaggle or UCI

- Select several continuous columns to perform multilinear regression

- Try at least three combinations of higher order terms

- For each combination of regressors, check the training error

- By adding too many columns, try to find a combination where singularities appear

1.4 Generalization and overfitting

1.4.1 Training error

- The quality of a model is measured by the training error: \(\sum_i (y_i -\hat{y_i})^2\)

- A model with high training error is underfitting

- In theory, training error tends to zero by using a basis of funtions, e.g. polynomials (Stone-Weierstrass theorem)

- Statistical framework: data comes from sampling a probability distribution

# Ohm's law is an empirical law

# number of samples

n_samples = 20

# Voltage (volts) (sort only for plotting)

voltage = runif(n_samples, min=50, max=100)

voltage = sort(voltage)

# Resistance (ohms)

resistance = 50

# "Measure" current

current = voltage / resistance

# Add noise

noise = runif(n_samples, min=0.8, 1.2)

current = current * noise

# Build a data frame

measures = data.frame(voltage, current)

with(measures, plot(voltage, current))

# Compute a linear model

modelo = lm(current ~ voltage, data=measures)

print(sqrt(sum(modelo$residuals^2) / nrow(measures)))

lines(voltage, predict(modelo), col="red")

# Increase model capacity

degree = 6

normaliza = rep(1, degree)

for (i in 2:degree) {

normaliza[i] = max(voltage^i)

measures = cbind(measures, voltage^i / normaliza[i])

colnames(measures)[i+1] = paste("voltage", i, sep="")

}

modelo_poly = lm(current ~ ., data=measures)

print(sqrt(sum(modelo_poly$residuals^2) / nrow(measures)))

puntos = seq(50, 100, 0.1)

newdata = as.data.frame(puntos)

colnames(newdata) = "voltage"

newdata = cbind(newdata, rep(0,length(puntos)))

colnames(newdata)[2] = "current"

for (i in 2:degree) {

newdata = cbind(newdata, puntos^i / normaliza[i])

colnames(newdata)[i+1] = paste("voltage", i, sep="")

}

currents = predict(modelo_poly, newdata)

max_cur = max(currents)

min_cur = min(currents)

with(measures, plot(voltage, current, xlim=c(50,100), ylim=c(min_cur, max_cur)))

lines(puntos, currents, col="red",

type = "l", xlim=c(50,100), ylim=c(min_cur, max_cur))1.4.2 Test error

- Once the model is built, the final aim is prediction

- We have to check the error on data that has not been used for regression

- We can simulate these data by a test set

- If the model gives low training error and high test error, it is overfitting

The key method to check for overfitting is to divide the dataset into training and validation sets and check for validation errors rather than test errors.

# Ohm's law is an empirical law

# number of samples

n_samples = 40

voltage = runif(n_samples, min=50, max=100)

# Resistance (ohms)

resistance = 50

# "Measure" current

current = voltage / resistance

# Add noise

noise = runif(n_samples, min=0.8, 1.2)

current = current * noise

# Build a data frame

measures = data.frame(voltage, current)

# Augment data

degree = 20

for (i in 2:degree) {

measures = cbind(measures, voltage^i / max(voltage^i))

colnames(measures)[i+1] = paste("voltage", i, sep="")

}

# Separate training and test datasets

# sample.split: package caTools

library(caTools)

indices = sample.split(measures$current)

measures_train = measures[indices,]

measures_test = measures[! indices,]

# Compute linear and polynomial model

modelo = lm(current ~ voltage, data=measures_train)

modelo_poly = lm(current ~ ., data=measures_train)

# Compare errors

cat("Train / lineal:", sqrt(sum(modelo$residuals^2) / nrow(measures_train)), "\n")

cat("Train / poli:", sqrt(sum(modelo_poly$residuals^2) / nrow(measures_train)), "\n")

predict_lineal = predict(modelo, newdata=measures_test)

predict_poly = predict(modelo_poly, newdata=measures_test)

error_test_lineal = sqrt(sum((measures_test$current - predict_lineal)^2) / nrow(measures_test))

error_test_poly = sqrt(sum((measures_test$current - predict_poly)^2) / nrow(measures_test))

cat("Test / lineal:", error_test_lineal, "\n")

cat("Test / poli:", error_test_poly, "\n")

summary(modelo_poly$coefficients)- When data is limited, we should try different partitions and average results (resampling)

- Setting the proportion of data used for test is heuristic

data("mtcars")

# Partition data

# sample.split: package caTools

indices = sample.split(mtcars$mpg, SplitRatio=0.7)

mtcars_train = mtcars[indices,]

mtcars_test = mtcars[! indices,]

# Fit the model

modelo = lm(mpg ~ disp + drat + wt, data=mtcars_train)

# Check errors

cat("Train:", sqrt(sum(modelo$residuals^2) / nrow(mtcars_train)), "\n")

prediction = predict(modelo, newdata=mtcars_test)

error_test = sqrt(sum((mtcars_test$mpg - prediction)^2) / nrow(mtcars_test))

cat("Test:", error_test, "\n")1.5 Assignment

- Select a real-world problem

- Explain the problem as a regression task and the expected result

- Download and describe data. Classify columns as numerical or categorical

- Select the features that will be used in the regression

- Partition data into training and test datasets

- Perform regression by using the lm R command.

- Analyze both types of error.

- Change the model, e.g. adding polynomial terms and check what happens to both errors.